
© Jonathan Dalar

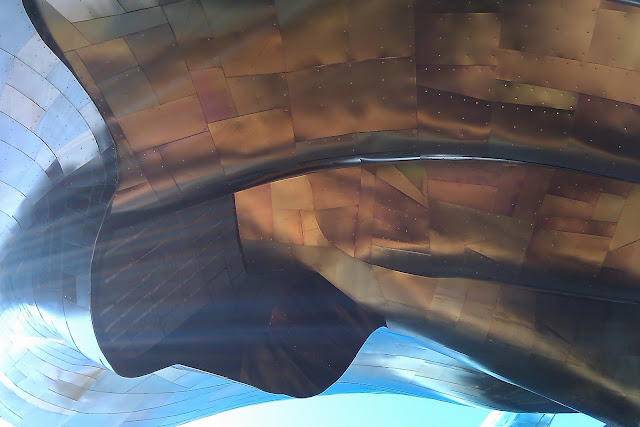

The building was originally constructed for the Experience Music Project (EMP), founder Paul Allen's way of bringing the local community something that wasn't available anywhere else. His vision was to engage people, inspire them, and get them excited about music, not just show them artifacts in a museum. He expanded the concept in 2004 with the addition of the Science Fiction Museum and Hall of Fame, giving us access to artifacts and unique memorabilia we otherwise would never have been able to see.


The museum owns a huge collection of artifacts but displays only a small fraction of it at any one time. This helps preserve the artifacts by limiting how long each one stays on display, and it keeps a large membership engaged by regularly refreshing the exhibits with a variety of content. After all, who wants to go see the same old thing all the time?

The Science Fiction Hall of Fame is currently closed while it is renovated along with the rest of the downstairs, in preparation for the new horror exhibit opening soon. That was a disappointment to me, because as a writer of science fiction, I always find it an awesome experience to walk along the row of glass-etched tributes to the genre's greatest writers. Inductees such as Philip K. Dick, H. G. Wells, Ray Bradbury, Isaac Asimov, William Gibson and many others have been a great inspiration to me. It reopens next summer, so I guess I'll just have to wait and visit again then.

The current exhibits are James Cameron's Avatar and Battlestar Galactica, along with the Jimi Hendrix and Nirvana exhibits on the EMP side, which I personally found equally fascinating but a little off topic for this blog. Avatar runs through September 3, 2011, and Battlestar Galactica through March 4, 2012.

Avatar

To start this part of the discussion, here are director James Cameron and actor Giovanni Ribisi talking about the project at the opening of the exhibit, in a Seattle Times news video:

Before you even get inside the Avatar exhibit, you're met with a very cool interactive experience. Floating jellyfish-like creatures from the movie waft around on a large screen at the entrance and react to the shadow you cast on it. If you stand still long enough, they will land on your hand.


Once inside, you'll find a wonderful array of interactive exhibits, such as one that lets you digitally design your own plants that might be found on Pandora.


Further into the exhibit are memorabilia from the movie such as handmade models of the Na'vi characters...


...and the full-sized Armor Mobility Platform (AMP) suit.


The exhibit that draws the most attention, however, is the interactive 3D motion-capture studio, where you can record a clip of yourself digitally inserted into one of two scenes from the 3D Avatar world. It essentially lets you star in your own 30-second Avatar movie clip...


...which, of course, I did. The clip is instantly uploaded to YouTube, and you can have it e-mailed to yourself to watch later. This was my experience.

I'm evidently not quite ready for Hollywood, but it was certainly entertaining and educational.

Battlestar Galactica

This exhibit was my son's favorite part of the trip. He plays the online game and found it fascinating to see what he'd been playing "in real life." The only downside for him was worrying that he'd spoil the ending for himself, which I found quite amusing.

The exhibit features three full-size prop spaceships, which in my opinion are the coolest part. They're all scuffed up and look like they've seen their share of battle.

The Viper Mk. II:


The Viper Mk. VII:


And the Cylon Raider:


In addition to the ships, there are a large number of costumes and other props, as well as interactive exhibits that focus on the shows' concepts and conflicts.



As an author, I also found the genesis and timelines of the two shows (1978 and 2003) especially interesting. I'm drawn to the behind-the-scenes work that goes into a project and the decisions that shape it into its final version. Comparing the two shows offers a glimpse into that, at least for me.

Coming in October

And now for a sneak peek at the new horror exhibit, Can't Look Away, opening for members on October 1, 2011, and to the general public on October 2.

EMP's senior curator Jacob McMurray has been putting together the new downstairs horror exhibit for more than a year now, and it sounds like a lot of chilling fun! He's been working with directors Eli Roth (Hostel), John Landis (Animal House, An American Werewolf in London), and Roger Corman (Little Shop of Horrors, Death Race 2000) to curate a selection of films that will serve as the launch pad for the exhibition. The goal is to cover a wide range of the genre, spanning different generations and different kinds of films. The exhibit will also examine the psychology of horror, looking at why we as humans are so fascinated by it even though it scares us.

Interactive exhibits will include a scream booth: you step into a soundproof booth and watch a horror film clip, hopefully screaming on cue. Your scream is captured on film and shown on the screen outside the booth. Is it bad that my first thought about this exhibit was that it will probably appeal most to the husbands and boyfriends in the crowd?

There will also be a shadow monster interactive, where your shadow is captured and digitized, allowing you to turn different body parts into strange grotesques and create monsters from your own human form. I can see my kids spending a while at this part of the exhibit.

For the behind-the-scenes geeks in the crowd, there will be exhibits on sound in horror movies, focusing on sound effects and music and how they are layered together to create the desired effect. Again, the geek in me comes out. I'm really looking forward to this one.

In addition to the interactive areas, the exhibit will house a number of iconic artifacts, including one of Freddy Krueger's gloves, one of the Jason Voorhees masks from Friday the 13th, and Jack Torrance's axe from Stanley Kubrick's The Shining. Perhaps the most exciting artifact is an original manuscript of Bram Stoker's Dracula. Seeing those alone will be worth the price of admission. I know I'll be one of the first visitors there when it opens.


Finally, I'd like to extend a special thank you to PR Director Anita Woo and the rest of the staff at the EMP. They were very knowledgeable and, more importantly, willing to take the time to answer all of my geeky questions. For directions to the EMP, tickets and further information, you can visit the EMP's website. You can also find them on Facebook and Twitter.